Setup a wicked Grafana Dashboard to monitor practically anything

https://denlab.io/setup-a-wicked-grafana-dashboard-to-monitor-practically-anything/

I recently made a post on Reddit showcasing my Grafana dashboard. I wasn’t really expecting it to get any clicks, but since it was a labor of love I thought it might spark the interest of a few people. I got a significant amount of feedback requesting a blog post showing how I set up the individual parts that make my Grafana dashboard sparkle.

Let’s start with the basics. What the heck is Grafana? Well, this image should give you an idea of what you could make, or make better, with Grafana.

I use this single page to graph all the statistics I care about glancing at on a moment’s notice. It gives me a quick overview of how my server is doing without having to access five or six different hosts to see where things are at. Furthermore, it graphs these over time, so you can quickly see how your resources are handling the workload on the server at any given point. So if you’re sold – let’s get started! There is a lot to cover, so I’ll start by laying out the basics to help new users understand how it all ties together.

Let’s start with terminology and applications that will be used in this tutorial.

- Grafana – The front end used to graph data from a database. What you see in the image above, and by far the most fun part of the whole setup.

- Graphite – A backend database supported by Grafana. It has a lot of neat custom features that make it an attractive option for handling all of the incoming data.

- InfluxDB – Another backend database supported by Grafana. I prefer this database because it is quick to implement, because I already knew it, and because a few tutorials I dug up online used it. This tutorial will show you how to set up services using InfluxDB, but I’m sure that Graphite would work equally well if you want to color outside the lines.

- SNMP – Simple Network Management Protocol. I use this protocol as a standard query tool that most network devices natively support, or can have support added. SNMP uses OIDs to query data, but don’t worry, you don’t have to have any special addons if you don’t want them. I recommend you look up the specific SNMP datasheet for your device, as some devices have custom OIDs that give you very interesting graphable information! I’ll explain this more later.

- IPMI – Intelligent Platform Management Interface. This is used to pull CPU temperatures and fan speeds from my Supermicro motherboard. Most server grade motherboards have a management port with IPMI support. Look it up, you’ll be surprised by the information you can get!

- Telegraf – During the course of this article you’ll see that I use a lot of custom scripts to get SNMP/IPMI data. Another option would be to use Telegraf. I eventually will move most of my polling to Telegraf, but for right now I’m using Telegraf purely for docker statistics. I’ll explain how to set it up here.

- Collectd – CollectD is an old popular favorite. It’s an agent that runs on the baremetal server or in a VM that will automatically write data into your InfluxDB database. Very cool – but I don’t use it, because I prefer to limit installing extra tools on every server to monitor them.

I’ll walk you through how I set up the following monitoring applications:

- ESXi CPU and RAM Monitoring via SNMP and a custom script for RAM

- Supermicro IPMI for temperature and fan speed monitoring

- Sophos SNMP for traffic load monitoring

- UPS/Load monitoring with custom scripts and SNMP through a Synology NAS and CyberPower Panel

- Docker Statistics for CPU and RAM monitoring via Telegraf

- Synology Temperature and Storage information using SNMP

- Plex Current Transcodes using a simple PHP script

Installing Grafana and InfluxDB in Docker

If you want to install this vanilla – there are plenty of online guides for that. For the purposes of this guide we’ll be installing these in Docker. Why? Well, it’s easy to set up, easy to maintain, and easy to back up. I highly recommend you spend a few of your lab nights playing around with Docker to see how powerful it really is!

Installing Grafana

Create a persistent storage volume. This ensures that when you destroy and recreate the grafana docker to upgrade it, your configuration will be retained.

docker run -d -v /var/lib/grafana --name grafana-storage busybox:latest

Create and start the Grafana docker. At this point Grafana will be fully installed and ready to configure.

docker create \
  --name=grafana \
  -p 3000:3000 \
  --volumes-from grafana-storage \
  -e "GF_SECURITY_ADMIN_PASSWORD=hunter2" \
  grafana/grafana

docker start grafana

Docker upgrade script

I like to drop these in ~/bin/ so that I can just run the command to upgrade at any point. It’s also a good reminder of how I initially configured the docker image.

Create the upgrade script

vi ~/bin/updategrafana

#!/bin/bash

# Define a timestamp function

timestamp() {

date +"%Y-%m-%d_%H-%M-%S"

}

timestamp

echo "Pulling Latest from grafana/grafana"

docker pull grafana/grafana

echo "Stopping grafana Container"

docker stop grafana

echo "Backing up old grafana Container to grafana_$(timestamp)"

docker rename grafana grafana_$(timestamp)

echo "Creating and starting new grafana Server"

docker create \
  --name=grafana \
  -p 3000:3000 \
  --volumes-from grafana-storage \
  -e "GF_SECURITY_ADMIN_PASSWORD=hunter2" \
  grafana/grafana

docker start grafana

Autostart the image – create a systemd startup script

vi /lib/systemd/system/grafana.service

[Unit]
Description=grafana container
Requires=docker.service
After=docker.service

[Service]
User=dencur
Restart=on-failure
RestartSec=45
ExecStart=/usr/bin/docker start -a grafana
ExecStop=/usr/bin/docker stop -t 2 grafana

[Install]
WantedBy=multi-user.target

Enable on startup

systemctl enable grafana.service

If you want to start it up for testing

systemctl start grafana

Installing InfluxDB

Create your local storage

mkdir -p /docker/containers/influxdb/conf/
mkdir -p /docker/containers/influxdb/db/

Check your folder ownership (optional)

This may not be required, depending on which user created the folders above and which OS you use. You’ll want this path to be owned by the user that runs your Docker containers. Replace user below with your username.

chown user:user -R /docker

Generate the default configuration

You may want to take a look at this file after you generate it. There are some useful options to note.

docker run --rm influxdb influxd config > /docker/containers/influxdb/conf/influxdb.conf

Create and start the InfluxDB Container

docker create \
  --name influxdb \
  -e PUID=1000 -e PGID=1000 \
  -p 8083:8083 -p 8086:8086 \
  -v /docker/containers/influxdb/conf/influxdb.conf:/etc/influxdb/influxdb.conf:ro \
  -v /docker/containers/influxdb/db:/var/lib/influxdb \
  influxdb -config /etc/influxdb/influxdb.conf

docker start influxdb

Create home database

Check out your instance at localhost:8083. You’ll want to create the home db. To do that, go into the query field and enter:

CREATE DATABASE home
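The admin UI on port 8083 was dropped in later InfluxDB 1.x releases (1.3 and up), so if that page doesn’t load for you, the same statement can be sent to the HTTP API on port 8086. Here is a minimal sketch, assuming an InfluxDB 1.x container reachable on localhost. The names INFLUX_URL, CURL, and create_database are mine, made up for illustration, and are not part of the original setup.

```shell
#!/bin/bash
# Sketch: create a database through the InfluxDB 1.x HTTP API (/query endpoint).
# INFLUX_URL, CURL and create_database are illustrative names (assumptions).
INFLUX_URL="${INFLUX_URL:-http://localhost:8086}"
CURL="${CURL:-curl}"   # overridable so the function can be dry-run without a server

create_database() {
    local db="$1"
    # The statement is sent form-encoded in the q parameter.
    "$CURL" -sS -XPOST "$INFLUX_URL/query" --data-urlencode "q=CREATE DATABASE \"$db\""
}

# Usage (needs a running InfluxDB):
#   create_database home
```

Nothing is sent until you call create_database yourself, so it is safe to paste this into a script and wire it up later.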

Create the upgrade script

vi ~/bin/updateinfluxdb

#!/bin/bash

# Define a timestamp function

timestamp() {

date +"%Y-%m-%d_%H-%M-%S"

}

timestamp

echo "Pulling Latest from influxdb"

docker pull influxdb

echo "Stopping influxdb Container"

docker stop influxdb

echo "Backing up old influxdb Container to influxdb_$(timestamp)"

docker rename influxdb influxdb_$(timestamp)

echo "Creating and starting new influxdb Server"

docker create \
  --name influxdb \
  -e PUID=1000 -e PGID=1000 \
  -p 8083:8083 -p 8086:8086 \
  -v /docker/containers/influxdb/conf/influxdb.conf:/etc/influxdb/influxdb.conf:ro \
  -v /docker/containers/influxdb/db:/var/lib/influxdb \
  influxdb -config /etc/influxdb/influxdb.conf

docker start influxdb

Autostart the image – create a systemd startup script

vi /lib/systemd/system/influxdb.service

[Unit]
Description=influxdb container
Requires=docker.service
After=docker.service

[Service]
User=dencur
Restart=on-failure
RestartSec=45
ExecStart=/usr/bin/docker start -a influxdb
ExecStop=/usr/bin/docker stop -t 2 influxdb

[Install]
WantedBy=multi-user.target

Enable on startup

systemctl enable influxdb.service

If you want to start it up for testing

systemctl start influxdb

ESXi CPU and RAM

First you need to enable the SNMP service on your ESXi host. You will need to SSH into your host. You can enable SSH in the vSphere Web client or Windows client. Host -> Manage -> Security Profile -> Services.

After logging in, follow the section below that matches your version. I have two: ESXi 5.5 and 6.0.

ESXi 5.5

esxcli system snmp set --communities YOUR_STRING
esxcli system snmp set --enable true

You can set YOUR_STRING to whatever you like, but many times it’s public or private.

Enable the firewall rules to allow SNMP traffic

esxcli network firewall ruleset set --ruleset-id snmp --allowed-all true
esxcli network firewall ruleset set --ruleset-id snmp --enabled true

Then restart snmpd

/etc/init.d/snmpd restart

ESXi 6.0

esxcli system snmp set -r
esxcli system snmp set -c YOUR_STRING
esxcli system snmp set -p 161
esxcli system snmp set -L "City, State, Country"
esxcli system snmp set -C noc@example.com
esxcli system snmp set -e yes

Polling Script

Now you need a script to poll this data.

In order to save some space here, and to make things a little easier on everyone – I uploaded a public version of all my scripts to my personal gitlab.

Download/edit/copy esxmon.sh to your main server.

Inside the file, change the ESXi IP from 10.0.0.10 to the IP address of your host. Also change the curl write destination from localhost to the IP of your InfluxDB server if it runs on a different machine than the script. You may also want to adjust the number of CPUs to match your host.
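To give a feel for what a poller like this does per CPU, here is a rough sketch that turns snmpwalk output into InfluxDB line protocol. It is not the contents of esxmon.sh. It assumes the host exposes the standard hrProcessorLoad column (1.3.6.1.2.1.25.3.3.1.2), and the measurement and tag names (esxi_cpu, host, cpu) are invented for the example.

```shell
#!/bin/bash
# Sketch: convert `snmpwalk` output for hrProcessorLoad into InfluxDB line protocol.
# cpu_lines_to_influx and the esxi_cpu/host/cpu names are hypothetical.

cpu_lines_to_influx() {
    local host="$1"
    # Each input line looks like: iso.3.6.1.2.1.25.3.3.1.2.5 = INTEGER: 12
    # The last OID component is the processor index; the last field is the load in percent.
    awk -v host="$host" '
        / = INTEGER: / {
            n = split($1, oid, "[.]")
            printf "esxi_cpu,host=%s,cpu=%s value=%s\n", host, oid[n], $NF
        }'
}

# Sample input standing in for:
#   snmpwalk -v 2c -c public 10.0.0.10 1.3.6.1.2.1.25.3.3.1.2
sample='iso.3.6.1.2.1.25.3.3.1.2.1 = INTEGER: 12
iso.3.6.1.2.1.25.3.3.1.2.2 = INTEGER: 7'

printf '%s\n' "$sample" | cpu_lines_to_influx esxi01
```

Because every CPU found in the walk becomes its own line, you don’t have to hard-code the CPU count. The output could then be piped into curl -XPOST 'http://localhost:8086/write?db=home' --data-binary @- to store it.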

Now you probably want this script to automatically load on boot. In order to do that, download the esxmon daemon, edit the top few lines, and copy it to /etc/init.d/.

Enable this script to start automatically.

sudo update-rc.d esxmon defaults
sudo update-rc.d esxmon enable

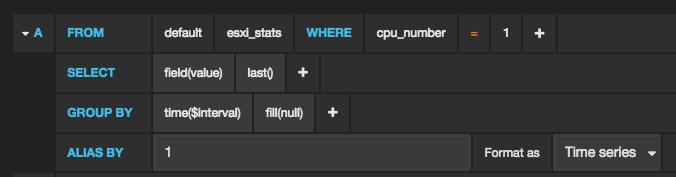

In Grafana, you should now be able to create a new graph panel, select metrics, and play around with creating a graph to show the data. Here is an example of one of my 8 queries to show the CPU.

Host temperatures and fan speeds

One of the main reasons I wanted to research and implement a panel like this is to closely monitor my critical temperatures. You’ll notice a theme on my dashboard, and that is that I care more about health and temperature status than I do about network traffic. My whole “lab” is sitting in a closet in our office. I’m adding some cooling intake and exhaust, and when that’s implemented I want to make sure I can closely monitor the health of the server with the door shut. For the time being, it’s just camping in my closet with an open door.

In order to read this information, I decided to pull the IPMI data directly from my Supermicro motherboard. I currently only have one (heavily burdened) host in my homelab. It’s about time I upgrade and add a new compute node, but for now it’s enough to do almost everything I want to do. If you have multiple hosts, you’ll need to read IPMI data from each individually. If you’re not using a Supermicro board, your sensor names may be different for the same data.

First install ipmitool. This is used by our script to issue the ipmi commands to the Supermicro motherboard port.

apt-get install ipmitool

Download/edit/copy healthmon.sh to your main server.

Inside the file, change the IP from 10.0.0.12 to the IP address of your motherboard IPMI port. Also change the curl write destination from localhost to the IP of your InfluxDB if that is different than the server the script is working on.
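If your board reports different sensor names, the parsing is the part you will need to adapt. Below is a small sketch of pulling one number out of ipmitool sdr style output. The helper name sensor_value is made up for this example, and the sample lines are only there so the snippet runs without a BMC.

```shell
#!/bin/bash
# Sketch: extract one numeric reading from `ipmitool sdr` style output.
# sensor_value is a hypothetical helper; sensor names vary from board to board.

sensor_value() {
    local name="$1"
    # Fields are separated by "|". Match the trimmed first field exactly, then
    # print the leading number of the second field (prints nothing for "no reading").
    awk -F'|' -v name="$name" '
        {
            key = $1; gsub(/^[ \t]+|[ \t]+$/, "", key)
            val = $2; gsub(/^[ \t]+/, "", val)
            if (key == name && val ~ /^[0-9]/) { split(val, parts, " "); print parts[1] }
        }'
}

sample='CPU Temp         | 47 degrees C      | ok
FAN1             | no reading        | ns
FAN2             | 500 RPM           | ok'

printf '%s\n' "$sample" | sensor_value "CPU Temp"   # prints 47
printf '%s\n' "$sample" | sensor_value "FAN2"       # prints 500
```

Matching the whole sensor name, rather than grepping for a substring, keeps a query for FAN1 from also picking up a sensor called FAN10.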

Now you probably want this script to automatically load on boot. In order to do that, download the healthmon daemon, edit the top few lines, and copy it to /etc/init.d/.

Enable this script to start automatically.

sudo update-rc.d healthmon defaults
sudo update-rc.d healthmon enable

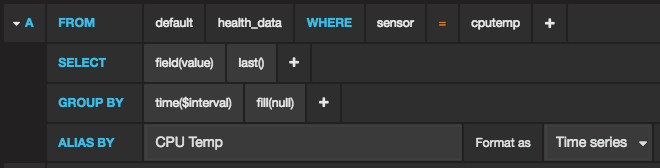

In Grafana, you should now be able to create a new graph panel, select metrics, and play around with creating a graph to show the data. Here is an example of one of my queries.

Sophos Internet Traffic Monitoring

I currently have two networks established in my home. One is a DMZ network I use for external web hosting (like this site) and a guest wifi network that tunnels all its traffic through a VPN. The other is my main internal network. One of the most desired parts of any good dashboard is being able to quickly monitor your network usage. I decided to do this using SNMP via Sophos. Much easier than I expected!

First make sure that you’ve got SNMP enabled. This can be done directly from the admin page.

Download/edit/copy inetmon.sh to your main server.

Inside the file, change the Sophos IP from 10.0.0.1 to the IP address of your Sophos router. Also change the curl write destination from localhost to the IP of your InfluxDB server if it runs on a different machine than the script. You’ll also want to make sure you map your ethernet ports to the appropriate network. You can list the interface names (the ifName column) via snmpwalk like this:

snmpwalk -v 2c -c public 10.0.0.1 iso.3.6.1.2.1.31.1.1.1.1
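Interface counters only ever count up, so traffic graphs come from the difference between two readings. As a rough sketch of that arithmetic (not the actual inetmon.sh code), assuming you read a 64-bit octet counter such as ifHCInOctets twice, a fixed number of seconds apart. The function name rate_bps is made up for this example.

```shell
#!/bin/bash
# Sketch: turn two readings of an SNMP octet counter into bits per second.
# Assumes a 64-bit counter such as ifHCInOctets; rate_bps is a hypothetical name.

rate_bps() {
    local prev="$1" cur="$2" seconds="$3"
    # A counter that went backwards means the device rebooted or the counter wrapped.
    # Return non-zero and print nothing so the caller can skip that sample.
    if [ "$cur" -lt "$prev" ] || [ "$seconds" -le 0 ]; then
        return 1
    fi
    # Octets are bytes, so multiply by 8 to get bits.
    echo $(( (cur - prev) * 8 / seconds ))
}

rate_bps 1000000 4750000 30   # prints 1000000
```

Since the result is derived from running totals, a longer polling interval gives you a coarser average but never loses traffic.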

Now you probably want this script to automatically load on boot. In order to do that, download the inetmon daemon, edit the top few lines, and copy it to /etc/init.d/.

Enable this script to start automatically.

sudo update-rc.d inetmon defaults
sudo update-rc.d inetmon enable

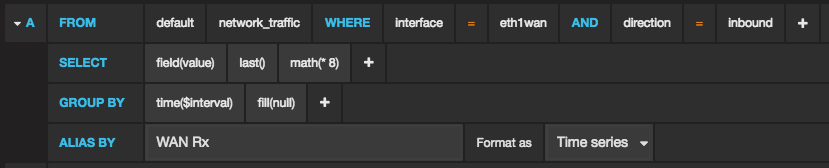

In Grafana, you should now be able to create a new graph panel, select metrics, and play around with creating a graph to show the data. Here is an example of one of my queries.

Power Usage / UPS Monitoring

This was probably one of the things I wanted the most for this dashboard, and it also turned out to be one of the most difficult. I had a strong desire to see how much power my homelab is pulling over time. My challenge? Well, I didn’t invest in a UPS with an SNMP adapter that would have made this all easy. Instead I purchased (and don’t regret doing so) 2x Cyberpower CP1500PFCLCD. One of these powers my ESXi host, my wifi router, and my cable modem. The other powers 2x Synology NAS’s, and a Cisco 24 port POE switch.

The challenge was figuring out a good way to pull statistics from these two UPS’s without disconnecting them from my intended devices. By default, I had one USB cable going to my master Synology NAS. The other UPS cable went to my ESXi host and was passed through to the CyberPowerPanel software.

Fortunately, Synology thought ahead and implemented SNMP reporting for connected UPS devices. The OID pulled precisely what I needed to graph Power, Load, Capacity, and Runtime.

UPDATE 10/9/2016: The following is out of date. I’ve moved away from using pwrstat, and now I’m using an updated version of upsmon.sh which you can find on my gitlab. Please see the updated post on how this works. There is no need for installing pwrstat or using the cpupsmon.sh script mentioned below. I’ll leave the rest alone for posterity.

Now figuring out how to pull data from the other UPS that was connected to CPP on my ESXi host was a bear to tackle. After hitting a dead end for days trying to hack up the software on the panel, I ended up installing pwrstat from Cyberpower on top of the panel VM. After this, a series of simple pwrstat commands allowed me to pull the data I needed from the panel without impacting the operations of the CPP software. I’m likely going to change this setup soon to pull some JSON data per feedback from a super helpful user on /r/homelab. That will be a separate (future) post.

So let’s get started walking through the UPS setup. It doesn’t seem so bad once you punch it all out on paper.

- Download/edit/copy the upsmon.sh script to your server.

- Download/edit/copy the upsmon daemon to your server to autostart the script.

- Install pwrstat on your CPP VM. Download it from here.

- Download/edit/copy the cpupsmon.sh script to the CyberPowerPanel VM.

- Create a daemon using example_dameon on the CPP VM and force it to autostart with the system.

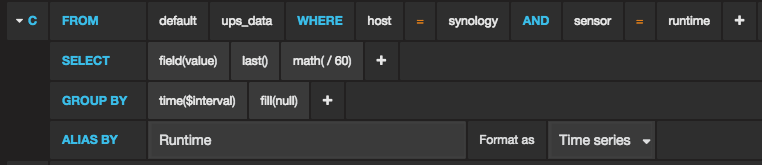

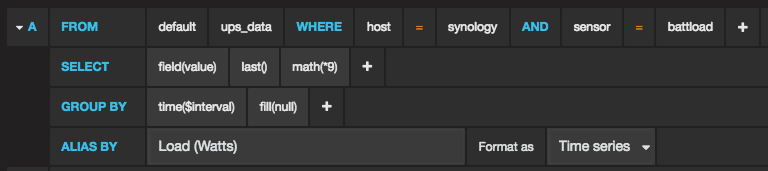

In Grafana, you should now be able to create a new graph panel, select metrics, and play around with creating a graph to show the data. Here is an example of one of my queries.

Docker Statistics

Docker has quickly become part of my daily workflow. It’s a great way to quickly deploy common services and upgrade frequently used packages while maintaining the integrity of your configuration and volume storage. I’m now up to nearly 15 docker containers across my single server on two separate VMs. It’s positively intoxicating to spin up a new piece of software, test it for a bit, and trash it with no trace.

To collect statistics on those containers, I’m going to walk you through deploying the Telegraf docker image. Telegraf is actually a good replacement for most of the custom scripts you’ve seen me list here. When it comes to SNMP data, you can have Telegraf capture all of this and drop it into InfluxDB. I haven’t spent a tremendous amount of time with it yet, and admittedly the last thing I set up was my Docker statistics. Knowing what I know now, I’ll likely be making more posts consolidating the previously used scripts into one Telegraf instance to capture all of the SNMP data I’m getting manually. For now, the scripts work very well (and are light on resources).

Install Telegraf with Docker

Create the docker volume storage location

mkdir -p /docker/containers/telegraf/

Generate the default configuration file

docker run --rm telegraf -sample-config > /docker/containers/telegraf/telegraf.conf

Modify this file to fit your needs. Most of the changes for your environment are near the top. In particular, look for these two things.

Output Plugin

# Configuration for influxdb server to send metrics to
[[outputs.influxdb]]
urls = ["http://localhost:8086"] # required
database = "telegraf" # required
precision = "s"
retention_policy = "default"
write_consistency = "any"
timeout = "5s"

Docker Configuration

[[inputs.docker]]

## Docker Endpoint

## To use TCP, set endpoint = "tcp://[ip]:[port]"

## To use environment variables (ie, docker-machine), set endpoint = "ENV"

#endpoint = "unix:///var/run/docker.sock"

#you may need to use the actual IP here

endpoint = "tcp://localhost:2375"

## Only collect metrics for these containers, collect all if empty

container_names = []

## Timeout for docker list, info, and stats commands

timeout = "30s"

Create the upgrade script

vi ~/bin/updatetelegraf

#!/bin/bash

# Define a timestamp function

timestamp() {

date +"%Y-%m-%d_%H-%M-%S"

}

timestamp

echo "Pulling Latest from telegraf"

docker pull telegraf

echo "Stopping telegraf Container"

docker stop telegraf

echo "Backing up old telegraf Container to telegraf_$(timestamp)"

docker rename telegraf telegraf_$(timestamp)

echo "Creating and starting new telegraf Server"

docker run \
  --name telegraf \
  --restart=always \
  --net=container:influxdb \
  -v /docker/containers/telegraf/telegraf.conf:/etc/telegraf/telegraf.conf:ro \
  telegraf

Autostart the image – create a systemd startup script

vi /lib/systemd/system/telegraf.service

[Unit]
Description=telegraf container
Requires=docker.service
After=docker.service

[Service]
User=dencur
Restart=on-failure
RestartSec=45
ExecStart=/usr/bin/docker start -a telegraf
ExecStop=/usr/bin/docker stop -t 2 telegraf

[Install]
WantedBy=multi-user.target

Enable on startup

systemctl enable telegraf.service

If you want to start it up for testing

systemctl start telegraf

Once telegraf starts, you should see data populating in your influxdb.

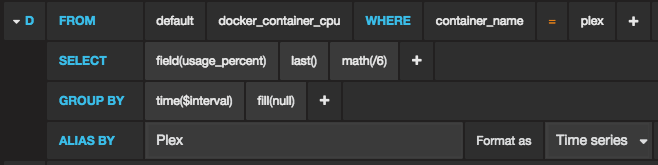

At this point you can graph your influxdb data in Grafana! Select metrics and play around with creating a graph to show the data. Here is an example of one of my queries.

Synology Statistics

I wrote a script to quickly pull drive temperatures, storage information, and drive health results from my Synology via SNMP. These were most of the important things that I wanted. You can also pull data such as network utilization, read/write statistics, disk failure rates, CPU, Memory, etc. For this I used another custom script that polls this information on an interval using SNMP to the Synology Host.

- Enable SNMP (SNMPv1/SNMPv2c) on the Synology NAS. The community should be ‘public’.

- Download/edit/copy synologystats.sh to your server.

- Download/edit/copy the synologystats daemon to your server to autostart the script.

In Grafana, you should now be able to create a new graph panel, select metrics, and play around with creating a graph to show the data. Here is an example of one of my queries.

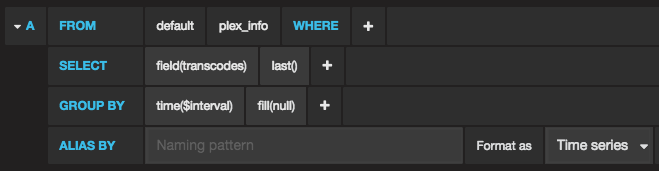

Plex Transcodes

The final touch on the dashboard is a small panel that shows me how many people are actively transcoding from Plex. Plex is likely the highest CPU suck on the whole server, so knowing how many transcodes I have happening at any given moment is a useful bit of information. To get this I hacked together a PHP script along with a Bash script to poll the web interface and quickly write the current count to InfluxDB. It works well enough for my purposes.

- Install PHP script execution support on your server.

sudo apt-get install php5-cli

- Download plexinfo.php and save it to your server.

- Download/edit/copy plexinfo.sh and save it to the same folder as plexinfo.php

- Set up Grafana to show a static panel with “last” value showing the number of transcodes.
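If you would rather skip PHP, the count can also be approximated in plain shell. This is only a sketch and not what plexinfo.php does. It assumes the Plex sessions endpoint (/status/sessions) returns XML in which each active transcode carries a TranscodeSession element, which may also include direct streams. The function name count_transcodes is invented for the example.

```shell
#!/bin/bash
# Sketch: count active transcodes in a Plex sessions XML document read from stdin.
# Assumption: one <TranscodeSession ...> element per transcoding stream.
# count_transcodes is a hypothetical helper name.

count_transcodes() {
    # grep -o prints each match on its own line, so wc -l gives the number of matches.
    grep -o '<TranscodeSession ' | wc -l | tr -d ' '
}

# Sample document standing in for the real server response.
sample='<MediaContainer size="2">
<Video title="A"><TranscodeSession key="abc" /></Video>
<Video title="B"></Video>
</MediaContainer>'

printf '%s\n' "$sample" | count_transcodes   # prints 1
```

In a real poller the sample would be replaced by a curl call to your Plex server, and the resulting number written to InfluxDB the same way the other scripts do it.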

Example: my customized setup for monitoring machine temperature and fan speed

vi /etc/init.d/rsyslog

..

start() {

[ -x $exec ] || exit 5

umask 077

echo -n $"Starting system logger: "

daemon --pidfile="$PIDFILE" $exec -i "$PIDFILE" $SYSLOGD_OPTIONS

RETVAL=$?

# add more output ipmi sensor data to 172.22.4.101 influxdb

nohup /usr/local/bin/healthmon.sh &

echo

[ $RETVAL -eq 0 ] && touch $lockfile

return $RETVAL

}

The file /usr/local/bin/healthmon.sh

#!/bin/bash

#This script pulls IPMI data from my Dell R710 motherboard

#in order to show temperatures and fan speed.

#The time (in seconds) we are going to sleep between readings.

#Each reading is an instantaneous sensor value, so a larger

#value only lowers the resolution of the graph.

sleeptime=60

#Command we will be using is ipmi tool - sudo apt-get install ipmitool

#Sample Data

#CPU Temp | 47 degrees C | ok

#System Temp | 35 degrees C | ok

#Peripheral Temp | 48 degrees C | ok

#PCH Temp | 51 degrees C | ok

#VRM Temp | 43 degrees C | ok

#DIMMA1 Temp | 35 degrees C | ok

#DIMMA2 Temp | 33 degrees C | ok

#DIMMB1 Temp | 32 degrees C | ok

#DIMMB2 Temp | 31 degrees C | ok

#FAN1 | no reading | ns

#FAN2 | 500 RPM | ok

#FAN3 | 1000 RPM | ok

#FAN4 | 600 RPM | ok

#FANA | 500 RPM | ok

#Vcpu | 1.77 Volts | ok

#VDIMM | 1.31 Volts | ok

#12V | 11.85 Volts | ok

#5VCC | 4.97 Volts | ok

#3.3VCC | 3.31 Volts | ok

#VBAT | 3.02 Volts | ok

#AVCC | 3.30 Volts | ok

#VSB | 3.25 Volts | ok

#Chassis Intru | 0x00 | ok

get_ipmi_data () {

COUNTER=0

while [ $COUNTER -lt 4 ]; do

#Get ipmi data

/usr/bin/ipmitool sdr > tempdatafile

systemtemp=`cat tempdatafile | grep "Ambient Temp" | cut -f2 -d"|" | grep -o '[0-9]\+'`

fan1=`cat tempdatafile | grep "FAN 1 RPM" | cut -f2 -d"|" | grep -o '[0-9]\+'`

fan2=`cat tempdatafile | grep "FAN 2 RPM" | cut -f2 -d"|" | grep -o '[0-9]\+'`

fan3=`cat tempdatafile | grep "FAN 3 RPM" | cut -f2 -d"|" | grep -o '[0-9]\+'`

fan4=`cat tempdatafile | grep "FAN 4 RPM" | cut -f2 -d"|" | grep -o '[0-9]\+'`

rm tempdatafile

if [[ $systemtemp -le 0 || $fan2 -le 0 || $fan3 -le 0 || $fan4 -le 0 || $fan1 -le 0 ]];

then

echo "Retry getting data - received some invalid data from the read"

else

#We got good data - exit this loop

COUNTER=10

fi

let COUNTER=COUNTER+1

done

}

print_data () {

echo "System Temperature: $systemtemp"

echo "Fan1 Speed: $fan1"

echo "Fan2 Speed: $fan2"

echo "Fan3 Speed: $fan3"

echo "Fan4 Speed: $fan4"

}

write_data () {

#Write the data to the database

curl -i -XPOST 'http://172.22.4.101:8086/write?db=labipmi' --data-binary "health_data,host=IPStorServerIPMI,sensor=systemtemp value=$systemtemp"

curl -i -XPOST 'http://172.22.4.101:8086/write?db=labipmi' --data-binary "health_data,host=IPStorServerIPMI,sensor=fan1 value=$fan1"

curl -i -XPOST 'http://172.22.4.101:8086/write?db=labipmi' --data-binary "health_data,host=IPStorServerIPMI,sensor=fan2 value=$fan2"

curl -i -XPOST 'http://172.22.4.101:8086/write?db=labipmi' --data-binary "health_data,host=IPStorServerIPMI,sensor=fan3 value=$fan3"

curl -i -XPOST 'http://172.22.4.101:8086/write?db=labipmi' --data-binary "health_data,host=IPStorServerIPMI,sensor=fan4 value=$fan4"

}

#Prepare to start the loop and warn the user

echo "Press [CTRL+C] to stop..."

while :

do

#Sleep between readings

sleep "$sleeptime"

get_ipmi_data

if [[ $systemtemp -le 0 || $fan1 -le 0 || $fan2 -le 0 || $fan3 -le 0 || $fan4 -le 0 ]];

then

echo "Skip this datapoint - something went wrong with the read"

else

#Output console data for future reference

print_data

write_data

fi

done
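One possible refinement: write_data above makes five separate HTTP requests per cycle. The InfluxDB write endpoint accepts several points in one request body, one per line, so the payload can be built first and sent once. Here is a minimal sketch. The helper name build_payload is my own and not part of the script above.

```shell
#!/bin/bash
# Sketch: build one multi-line line-protocol payload instead of one POST per sensor.
# build_payload is a hypothetical helper name.

build_payload() {
    local host="$1"; shift
    local pair
    # Remaining arguments are sensor=value pairs, e.g. fan1=3600
    for pair in "$@"; do
        # ${pair%%=*} is the text before the first "=", ${pair#*=} the text after it.
        printf 'health_data,host=%s,sensor=%s value=%s\n' "$host" "${pair%%=*}" "${pair#*=}"
    done
}

build_payload IPStorServerIPMI systemtemp=24 fan1=3600
```

To send it, the output could be piped into a single curl -i -XPOST 'http://172.22.4.101:8086/write?db=labipmi' --data-binary @- call.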